OVERVIEW

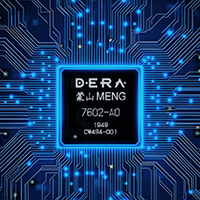

In AI scenarios, model training, inference, and large-scale data preprocessing place extremely high demands on storage IOPS, bandwidth, and latency. DERA NVMe SSDs deliver outstanding random read/write performance with high stability and consistency, meeting the requirements of a wide range of AI workloads. Built-in data protection and encryption mechanisms safeguard the security and integrity of core models and training data. In addition, a range of capacity options lets the drives flexibly support AI storage needs at every scale, from edge inference to large-scale cluster training.

PAIN POINTS

Data Throughput Bottleneck

Frequent reads of huge numbers of small files during training can create I/O bottlenecks that leave GPUs/NPUs idle, slowing training progress and increasing costs.

Storage Capacity & Cost Pressure

With large models and multimodal applications, dataset sizes have grown from terabytes (TB) to petabytes (PB). Balancing performance against cost is now critical.

Inadequate System Reliability

Training jobs often run for weeks. A storage failure interrupts progress, wasting significant time and compute resources.

High Operational Complexity

Scaling, maintaining, and migrating storage in large AI clusters is complex. A high-performance, scalable, and easy-to-manage unified storage foundation is essential.

CASE

Customer profile: cloud service providers and enterprises that build and operate ultra-large-scale AI computing clusters, aiming to interconnect hundreds of thousands of GPUs into a single "supercomputer" that provides the computational foundation for trillion-parameter models.


Challenge

Data I/O Bottlenecks

Extremely High Stability Requirements

Complex Integration of Storage and Computing Systems

Solution

DERA D8000 SSDs are adopted as the underlying data storage for the cluster.

Extreme Performance: The D8000’s high throughput and low latency sustain continuous high-speed data loading, eliminating I/O bottlenecks and fully unleashing the computational power of hundreds of thousands of GPUs.

Robust Reliability: Its enterprise-grade reliability, combined with the cluster’s "minute-level self-healing" mechanism, keeps long-running training jobs going without interruption.

Seamless Integration: Deeply compatible with the computing system’s technology stack, providing an out-of-the-box, one-stop solution that simplifies deployment and maintenance.

Benefit

Enhanced Efficiency: Shortens model training cycles and accelerates AI R&D iteration.

Business Assurance: Significantly improves training task success rates and reduces Total Cost of Ownership (TCO).

Solid Foundation: Works in synergy with computing and networking to provide a robust, high-performance data foundation for trillion-parameter models.